How should we approach the AI revolution?

Reporting from the PubCon event, Cait Cullen asks: is artificial intelligence a threat to integrity or the herald of new opportunities?

At IOP Publishing's recent international colleague conference, PubCon, the discussion turned to the elephant in the room: artificial intelligence (AI). The use of AI in scholarly publishing creates a host of opportunities but also poses many challenges and integrity issues. As academic publishers, how should we approach the AI revolution?

Chaired by Kim Eggleton, Head of Research Integrity and Peer Review at IOP Publishing, a panel of industry experts included Fabienne Michaud, Product Manager at Crossref; Dustin Smith, Co-founder and President of Hum; Dr Matt Hodgson, Associate Lecturer at the University of York; and Lauren Flintoft, Research Integrity Officer at IOP Publishing. The panel expressed a mix of excitement and concern about the use of AI in research and publishing.

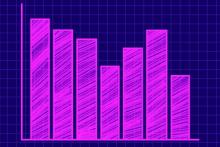

To gauge the temperature in the room at the start and end of the session, a poll was carried out, with IOP Publishing colleagues invited to anonymously submit their feelings on AI. Opinions were very mixed, with many feeling sceptical or concerned about the implications of adopting AI in publishing. On a scale of 1 to 10, where 1 is “terrified” and 10 is “very excited”, the average initial feeling on the impact of AI in scholarly publishing was 5.25. By the end of the session, the poll indicated that guests were generally left feeling more informed, enthusiastic, and conversational on the topic, with the average score rising to 6.17. But what are the problems and opportunities associated with the use of artificial intelligence tools in academic publishing?

Opportunities for AI in scholarly publishing?

Introduced by Kim Eggleton as ‘Captain Optimism’ for his positive view on the use of AI, Dustin Smith said he believes that tools such as large language models (LLMs) can help us to broaden and engage our audience. “AI tools have the potential to streamline our workflows, allowing us more time to focus on outstanding issues such as research integrity. AI tools can help to identify potential topics for special issues and collections, single out areas where we should be commissioning more content, and analyse lulls and booms in publishing conversations on a global scale,” he said.

“When we talk about AI, most people immediately think of ChatGPT. But ChatGPT is a consumer-grade product that was not made for publishing. The real opportunity for publishers is the fine-tuned AI products specifically made for academic and scholarly use,” Smith continued. “With these products, many of the major concerns around AI use can be bypassed, and there are opportunities to take a step forward with some of the longest-standing publishing challenges. For example, we can add restrictions to prompts asking only for cited content, or give LLMs access to tools (such as simple calculators or sophisticated databases).”

One example of creating opportunity with AI is to generate summaries of papers, explained Smith. “Some publishers are using GPT-4, ChatGPT's more capable successor, to generate ‘lay summaries’ of academic papers for their magazines. However, these summaries must still be validated by a human professional to ensure accuracy.”

Dr Matt Hodgson also believes in the benefits of AI for research and scholarly publishing. He argues that AI tools can be used to boost the accessibility of science, allowing dissemination of content to a wider audience. He adds that “AI should be used as a tool to enhance and support human capabilities, not to replace them. To stay competitive as a researcher or publisher you must embrace new technologies; that has always been the case. With AI, it simply feels a little different owing to its advanced capabilities, which can make us feel somewhat threatened. However, remember that AI itself won’t take your job – but someone using it will.”

What are the threats to scholarly publishing from AI?

The jury is still out on AI in academic publishing, argued Fabienne Michaud: “AI is here to stay, but, when it comes to policies and processes, we are not quite there yet. As an industry, we must combine our efforts to establish a base of ethical guidance and training.”

She continued: “Academic publishing is built on a foundation of trust and integrity, and when it comes to AI, one of the biggest threats is its lack of originality, creativity, and insight. AI writing tools were not built to be correct. Instead, they are designed to provide plausible answers. This is in juxtaposition to the values that form the foundation of scholarly publishing.”

Speaking from experience, Lauren Flintoft also expressed some trepidation: “AI tools rely on regurgitated information from other sources that can often be outdated and incorrectly cited. We know that AI tools are sometimes fabricating content and not correctly citing their sources. Instead, they make up seemingly plausible references that don’t lead to credible, or even real, academic texts. Without proper monitoring, the inaccurate content that AI generates, called ‘hallucinations’, could pollute the scholarly record with false information. It is not possible for humans to detect every instance of AI-generated content, and it is unfair to expect this.”

Flintoft also expressed concerns about the ethical issues with using AI as an author: “AI tools don’t currently consider permissions required for third party content they draw upon, nor can they take responsibility for their work; both are actions regularly expected of academic authors. Furthermore, there will likely need to be updates made to UK copyright law to fully support the use of AI content, and those using them must still be mindful of permissions. Robust frameworks need to be put in place in order for AI tools to be ethically used.”

Where does the responsibility lie for enforcing proper use of AI tools?

Hodgson concluded: “AI will continue to grow, as both a tool and a concern within the industry. Therefore, we need some level of governance to ensure it is used safely, fairly, and responsibly, and that responsibility should not lie solely with publishers. We must encourage more active conversations around the ethics and integrity issues of AI within academia. The most immediate threat is amongst students and early career researchers who, at this stage, often do not see beyond the excitement.”

Despite the varying experiences and outlooks of the panel, by the end of the session one message was clear: AI will play a role in our future, and so we must approach it together with shared responsibility.

Cait Cullen is communications officer at IOP Publishing

Do you have any thoughts about AI in scholarly communications that you would like to share with Research Information readers? Get in touch with tim.gillett@europascience.com