AI challenges for librarians

Darrell Gunter takes a critical look at racial bias and ethics in artificial intelligence

Allow me to share two stories with you.

The first story is about a town that had a bad fire. The published story stated that the town experienced a tremendous fire that burned everything down to the ground. Fortunately, no one was seriously injured. The town mayor estimated rebuilding would take 18 to 24 months.

This is the truth, as told by this reporter, that will stand for generations. But what if it was not the truth?

Truth is in the balance of the story reported by the journalist. What is truth? Whoever is writing the story is the arbiter of the truth. But what if this so-called arbiter of the truth chose to omit key “truths”? Suppressing the truth of a story or article can significantly alter the reader’s knowledge and perception of it. Truth omitted changes the narrative. Facts and truth matter. If we allow the facts and truth to be altered, the knowledge that current and future generations have about a specific topic will be forever diminished.

Considering the omissions, I must ask the question: generationally, what type of human beings are we creating if this is allowed? It can be argued that we are creating a generation of human beings who operate on diminished information and whose daily decisions will be based on that diminished information.

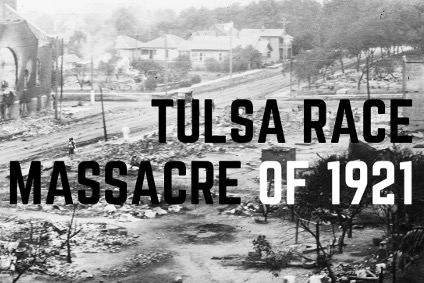

Can you imagine if the Tulsa Race Massacre of 1921 had been reported as just a bad fire, rather than as the massacre that claimed the lives of 32 people and injured thousands? The omission of truth and facts would diminish the humanity of this very disturbing event.

Language matters. Words matter. Words grouped together create a context. Remember this statement: “content in context”. Algorithms are made up of words and directions to achieve an information hypothesis. This is one example of the challenges we as a community face with AI.

The current landscape for librarians

The article The Challenges of Being a Librarian shows that librarianship has pros and cons like all professions. Published by EveryLibrary, the article lists 15 items as everyday annoyances that the librarian faces. However, we must add AI bias and ethics to this list – as well as copyright.

AI did not start with ChatGPT. Artificial intelligence (AI), sometimes called machine intelligence, is intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans and other animals. In computer science, AI research is defined as the study of "intelligent agents": any device that perceives its environment and takes actions that maximise its chance of successfully achieving its goals.

The term artificial intelligence was coined by John McCarthy in 1956 – but the journey to understand whether machines can truly think began before that. In his seminal 1945 essay, As We May Think, Vannevar Bush proposed a system that amplifies people's own knowledge and understanding.

Bush expressed his concern that scientific efforts were being directed toward destruction rather than understanding. He described his desire for a sort of collective memory machine, the memex, which would make knowledge more accessible and which he believed would help fix these problems. Through this machine, Bush hoped to transform an information explosion into a knowledge explosion. Five years later, Alan Turing wrote a paper on the notion that machines could simulate human beings and do intelligent things, such as play chess.

Definitions of AI

Librarians, like many professionals in various fields, encounter both opportunities and challenges with the integration of AI services into their work. Here are some of the key issues that librarians may face with AI services, particularly concerning ethics and accuracy:

- Algorithmic bias: AI systems can inherit biases present in the data used to train them. Librarians may need to be cautious about the potential biases in the datasets that power AI tools, especially regarding information retrieval. If the training data contains biases, the AI system may perpetuate and amplify those biases, leading to biased search results.

- Privacy concerns: AI tools often rely on vast amounts of data to improve their performance. Librarians must consider the privacy implications of collecting and using patron data to enhance AI services. Ensuring compliance with privacy regulations and protecting user data from misuse is crucial.

- Ethical use of AI: Librarians are responsible for ensuring that AI services are ethically deployed and aligned with professional and ethical standards.

- Accuracy and reliability: Librarians need to assess the accuracy and reliability of AI-generated information.

- User education: Librarians may face the challenge of educating users about the limitations and capabilities of AI services.

- Limited understanding of AI: Some librarians may have limited understanding of AI technologies, which can pose a challenge in effectively integrating these tools into library services.

- Resource allocation: Implementing and maintaining AI services may require additional resources, including financial investments, training programs, and ongoing support.

- Digital divide: The use of AI services in libraries may exacerbate existing digital divides if certain user groups lack access to technology or have limited digital literacy skills. Librarians need to be mindful of inclusivity and work towards providing equitable access to AI-enhanced services.

- Representation in training data: If the training data used to develop AI services lacks diversity, it can result in biased algorithms. Librarians should advocate for diverse and representative datasets to mitigate the risk of perpetuating racial biases in AI systems.

- Fairness and equity: Librarians must ensure that AI services are designed and deployed with fairness and equity in mind.

- Transparency: Librarians should advocate for transparency in AI algorithms and decision-making processes. Understanding how AI systems work is crucial for identifying and addressing potential biases, including race-related ones.

- Community engagement: Librarians can engage with their communities to understand their perspectives and concerns related to AI and racial bias.

- Education and awareness: Librarians play a role in educating both staff and users about the potential biases in AI systems and how they can impact different racial and ethnic groups.

- Ongoing monitoring and evaluation: Librarians should continuously monitor and evaluate the performance of AI services to identify and address any emerging issues related to racial bias.

In addition to the bias and ethical challenges, there is still the issue of copyright. Keri Mattaliano of Copyright Clearance Center wrote about 5 AI-Related Topics Every Information Professional Should Think About in 2024:

- Copyright questions about using AI in content

- Licensing content for AI

- Company guidelines and strategic directives around technologies using AI

- Budget ambiguity

- Staying current

Allow me to add another important term for us to be aware of: algorithms of oppression. A short video titled Algorithms of Oppression, by Safiya Umoja Noble, provides an excellent overview of this topic. By actively addressing these issues, librarians can contribute to the development and use of AI services that are more ethical, equitable, and inclusive, reducing the risk of perpetuating racial biases in library systems.

There are many known cases of AI bias. UnitedHealth has been in the news for lawsuits alleging that faulty algorithms denied care to patients. Nvidia staffers warned the company's CEO of the threat AI would pose to minorities. These are just a few of hundreds of examples of AI algorithms not working in an ethical and unbiased manner, and they clearly demonstrate the challenges facing librarians and, just as importantly, our global community.

The solutions: help is here

On the website AI Ethicist, there are 76+ organisations that are addressing AI bias and ethics. One of these organisations is the Algorithmic Justice League, led by Joy Buolamwini, who is featured in the Netflix documentary Coded Bias (www.codedbias.com). She also authored the book Unmasking AI: My Mission to Protect What Is Human in a World of Machines. Another organisation is the Center for AI and Digital Policy, led by Merve Hickok.

As you can see, the topic of AI is huge and will require a full-court press from our community to ensure that we are doing everything we can to fight back against bad actors and poor algorithms. Librarians are the one group of people who are on the front lines every day, helping students, faculty, administrators, researchers, citizens and others with their various information needs. In the ASERL webinar, I presented this topic to an audience of 170+ librarians and suggested the following next steps:

- Awareness - To be very intentional in learning more about bias and ethics in AI.

- Education - To provide education and training to their constituents.

- Engagement - To encourage their institution to engage with the AI community in a very active dialogue around bias and ethics.

- Action - To be proactive in addressing known AI bias and ethics issues.

Once these issues have been resolved, it is important to communicate the positive results to the community. This will demonstrate to the AI technical community that the global community is watching, will call out algorithms that are not productive, and will take action to correct them.

I am also asking all global citizens to do the same. If you see something, please say something to the organisations focused on correcting these algorithms. If we take these actions, we will make our world a better place.

Darrell Gunter is CEO of Gunter Media Group, and the author of Transforming Scholarly Research with Blockchain Technologies and AI.